The Big Blog Theory

The science behind the science

S07E04: The Raiders Minimization

November 10, 2013

I waited to post to see if anyone would find the Easter egg on the whiteboard of tonight’s show. It turns out that executive producer Steve Molaro commandeered one of tonight’s whiteboards. (Well, “commandeered” may not be quite the right word for someone who takes what he has full rights to.)

And truth be told I was frightened anyone would ever see it:

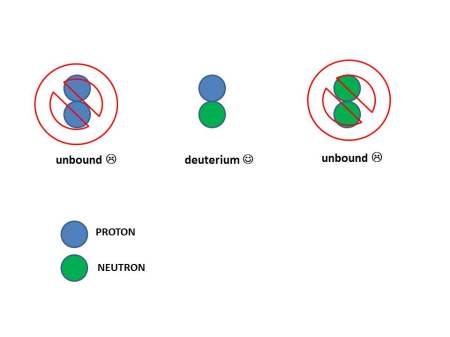

If that is not clear enough, then consider the title of this week’s episode. Now having Mr. Molaro’s permission to finally reveal its source, I ask you to look carefully at the critical scene of Raiders of the Lost Ark.


But as impossible as it may seem, it appears the folks at “Raiders of the Lost Ark” must not have had a science consultant, since these equations make no sense. If any of the BBT whiteboards’ dozens of fans had noticed it, they might blame the error on the BBT’s science consultant.

We can tell from the words and symbols that this is about projectile motion. The problem all started when I tried to figure out what that equation must have meant.

In physics we often first understand equations through their units. The variable $h$ is the height of the projectile and is measured as a length, typically in meters. That’s no problem with the first factor $v^2/2g$ because that has units $(\text{meters}/\text{second})^2/(\text{meters}/\text{second}^2)$. Voilà, meters. The second factor has two terms. The first term, “1”, has no units so we are still in meters. The painful part comes with the next term, $a^2g^2/v^4$. Put in the units and the $\text{meter}^4$ cancels $\text{meter}^4$. So far so good. But the seconds do not cancel and you are left with $1/(\text{seconds})^4$.

You can’t add that to something unitless. And even if you could, it would change the answer from nice meters for height to something with crazy units: $\text{meters}/(\text{seconds})^4$.
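The unit bookkeeping above can be sketched in a few lines of Python. This is only a minimal sketch: it tracks nothing but the exponents of meters and seconds, the helper names are made up for illustration, and it assumes that `a` is an acceleration in meters/second², which is the reading under which the meters cancel and the seconds do not.

```python
# Each unit is a pair: (exponent of meters, exponent of seconds).
LENGTH = (1, 0)          # meters
VELOCITY = (1, -1)       # meters / second
ACCELERATION = (1, -2)   # meters / second^2
UNITLESS = (0, 0)


def times(x, y):
    """Multiply two quantities: exponents add."""
    return (x[0] + y[0], x[1] + y[1])


def over(x, y):
    """Divide two quantities: exponents subtract."""
    return (x[0] - y[0], x[1] - y[1])


def to_power(x, n):
    """Raise a quantity to the n-th power: exponents scale."""
    return (x[0] * n, x[1] * n)


# First factor: v^2 / (2 g). The pure number 2 carries no units.
first_factor = over(to_power(VELOCITY, 2), ACCELERATION)

# Second term inside the parentheses: a^2 g^2 / v^4,
# ASSUMING a is an acceleration (an interpretation, not a given).
second_term = over(
    times(to_power(ACCELERATION, 2), to_power(ACCELERATION, 2)),
    to_power(VELOCITY, 4),
)

print("first factor (m, s exponents):", first_factor)
print("second term (m, s exponents):", second_term)
print("second term can be added to 1:", second_term == UNITLESS)
```

Under that assumption the first factor comes out as a plain length, while the second term keeps four leftover powers of inverse seconds, so it cannot be added to the unitless 1.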

So it was to my great relief that nobody found this. I did not want to have to answer piles of hate mail or endure the scorn of comments on IMDB. A number of articles have been written about TBBT ruining Raiders of the Lost Ark. But until now, those people did not know what must be the real reason why.

S07E03: The Scavenger Vortex

October 3, 2013

Today we learned from Sheldon Cooper: oxygen makes up nearly half (47%) of the Earth’s crust by weight. Not oxygen the gas, but oxygen in the form of silicates, that is, minerals with an atom of silicon bound tightly to oxygen atoms, constituting a long list of minerals found in the Earth’s crust. Content in knowing this fact, you may be forgiven the feeling of walking on air.


To this day no scientific effort has reached the Moho. It would be a lot easier if all this oxygen were not bound to silicon. But then we would have other problems. Let’s get our moho workin’.

“Silicon: one of the most common elements in the Earth’s crust.” True. But “one of”? Silicon is merely the second most common element in the Earth’s crust, weighing in at 28%. We are here to resolve the suspense. What is actually the most common element in the Earth’s crust?

One might guess a hard element holds you up when walking on the Earth. Iron? Magnesium? Silicon? Actually, the most abundant element in the Earth’s crust, the element holding you up when you stand on terra firma, is oxygen.

Those of us who live and breathe on Earth are accustomed to finding our oxygen in the atmosphere. But that is a relatively late arrival. Oxygen came to Earth mostly already bound up in minerals and in water. Even as early life formed, any excess oxygen produced by early photosynthesis, such as by cyanobacteria, would quickly bond to other minerals it encountered, leaving the atmosphere mostly free of oxygen. Only about 2.5 billion years ago did photosynthesis produce oxygen faster than it could be absorbed, causing the fraction of free oxygen to rise in what is known as the “Great Oxygenation Event”. Within the last billion years, oxygen had few places left to go and its portion of the atmosphere increased even further. Oxygen in the atmosphere has only been common for the last half of the Earth’s history.

So what is this crust? Originally the Earth formed from the coalescing debris of the solar system. Dense elements, metals such as iron, fell to the center, or core, while less dense, rocky materials floated on top of it. The very surface, just the outer 3-6 miles of the land below the oceans, or the outer 10-60 miles of a continent, has a particularly low density compared to the rest of the Earth. That may sound deep, but the skin of an apple is, relative to the apple, a bit thicker than most of the crust is relative to the size of the Earth.
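To get a feel for how thin that is, here is a minimal sketch that expresses the crust depths quoted above as a fraction of the Earth's radius. The mean radius of about 3,959 miles is a standard figure that does not appear in the post, and the function name is just for illustration.

```python
EARTH_RADIUS_MILES = 3959  # mean radius of the Earth; not a number from the post

# Crust thicknesses quoted in the text, in miles.
crust_depths_miles = {
    "oceanic crust, thin end": 3,
    "oceanic crust, thick end": 6,
    "continental crust, thin end": 10,
    "continental crust, thick end": 60,
}


def fraction_of_radius(depth_miles, radius_miles=EARTH_RADIUS_MILES):
    """Return the depth as a fraction of the planet's radius."""
    return depth_miles / radius_miles


for label, depth in crust_depths_miles.items():
    percent = 100 * fraction_of_radius(depth)
    print(f"{label:>28}: {percent:.2f}% of the Earth's radius")
```

Even the thickest continental crust comes out at under 2% of the way to the center, and the ocean floor at a small fraction of 1%.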

The only reason geologists define a crust at all is that there is a clear separation in density between it and the denser rock in the region below it, called the mantle. The boundary separating the crust from the denser rocky mantle below is called the Moho, probably so named because “Moho” is easier to say than “the Mohorovičić discontinuity”. In 1909, a Croatian geologist, Andrija Mohorovičić, was studying the speed and direction of seismic waves and deduced that such a division must exist.

So if it is so thin, why not drill down and go look for the transition? Over fifty years ago, geologists thought this could shed light on the controversy over whether continental drift existed. (Nowadays, the largest arrays of radio telescopes can not only detect continents moving within about a week, they will not work if they do not correct for it.) Such a deep hole was the grand aim of one of the world’s first “Big Science” projects, “Project Mohole”. Starting in 1961, geologists picked one of the thinnest spots in the Earth’s crust, in the ocean off Mexico, and started drilling from a floating platform.

The geologists never made it past 601 feet before the project was canceled. Subsequently the Soviets, winning a battle of the Cold War, managed to drill down nearly 7 miles on land, creating the Kola Superdeep Borehole. But since this was on a continent, where the crust is thick, it was nowhere near deep enough to reach the Moho. The Soviet record was later beaten by a whole 100 feet, but for good reason: to extract oil from the Persian Gulf.

A Japanese project, Chikyū, aims to drill in a thin spot in the ocean where the mantle could be just over 4 miles below the surface. Its drill rig was damaged by an earthquake and the project was delayed.

If scientists could make it just about 0.25% as far as Brendan Fraser, we could have reached the Moho.

S07E01: The Hofstadter Insufficiency

September 26, 2013

This is the long-awaited moment for fans of The Big Bang Theory. The show has returned for another season with all new episodes. Or is it the even longer-awaited return of The Big Blog Theory, giving you “the science behind the science”?

Our season opens with Leonard at sea. He left his girlfriend and his friends to go on a scientific expedition. Conveniently for the story, he left on a research vessel to the North Sea where no one could visit him. But as we see, that story posed an inconvenient problem for the science….

Scientists, even physicists, of nearly every stripe at some point find themselves doing “field work”. I have traveled with my physics students to Arctic and Antarctic research stations for weeks and even months at a time. We’ve also gone several thousand feet underground into pure salt mines. We go to factories we never would have known existed to check on the critical parts of our detectors, the latest being one that could stamp out 12 foot by 5 foot circuit boards on what amounted to a giant hydraulic panini press. Other physicists and astrophysicists travel to mountaintops to build and use telescopes. Physicists go just about anywhere you can and maybe can’t imagine. If you thought a career in science was purely an office job, you are missing some data. The life of science can be a life of adventure.

Even if not going to a remote location, or even out-of-doors, many scientists travel to national laboratories for their data. Perhaps my most dangerous assignment of all was back when I was still a graduate student. I worked in decaying old trailers on the site of the Fermi National Accelerator Laboratory, complete with dead raccoons on the roof, overflowing toilets, and (being physicists) nobody-knew-what growing behind the paneling and carpets. Luckily, nobody contracted cholera.

Back to Leonard. Conducting field work on a research vessel is not uncommon. Actually, one of my former Ph.D. students is now a researcher at the Naval Research Lab who goes to sea often with instruments he built. A research vessel on the North Sea was a perfect way for the writers to send Leonard off, out of reach, over the summer break.

But then we had a problem. Leonard was working with Stephen Hawking, the physicist whom Penny calls the “dude who invented time”. More specifically, Hawking is one of the world’s leading experts on black holes and the physics that governs them, Einstein’s Theory of General Relativity. But there are no black holes to visit by a research vessel at sea. Nothing beyond Newton’s laws of physics is required to understand the sea. So maybe it was hopeless. What could Stephen Hawking possibly care about any research project Leonard would be conducting in the North Sea? Oceanography and marine biology could not be farther from General Relativity. But luckily there was one thing that fit the bill.

Nobody can visit a black hole, nor is anyone ever likely to. But theories must be challenged with data to find out which, if any, are right. It turns out that the mathematics of general relativity has something in common with the mathematics of fluid flows. One of Hawking’s famous predictions is that the previously held idea that nothing ever escapes a black hole had a loophole: black holes could eventually evaporate through a process conveniently called Hawking radiation. Because the process is quantum mechanical, it carries hope that it might lead us to the elusive theory of gravity that is also fully consistent with quantum mechanics.

Nobody has ever seen this radiation, but over thirty years ago William Unruh realized that supersonic fluids shared some mathematics in common with black holes. Specifically, he showed that the same arguments that led Hawking to predict radiation from black holes lead one to predict a certain spectrum of sound waves to be emitted from supersonic fluids. Even many of the quantum effects are there, with units of sound waves called phonons, in analogy to units of light being called photons. Decades later, Leonard is putting this to the test on the research vessel. Tune in to find out if the results were “promising” or not.

Now the biggest problem. The script coordinator asked me how to pronounce Unruh. But I’d never met him. I asked several of my theoretical colleagues who were of course sure of the answer, as theorists always are. But the problem was each was sure of a different pronunciation. In desperation, I knew I would have to contact Professor Unruh. I admit I was a bit nervous. I considered calling his answering machine at 4 in the morning hoping he would say his name. But since he’s a physicist there was a good chance he would be in his office. So I asked him. For the record it is UN-roo and he is very nice.


S05E03: The Pulled Groin Extrapolation

September 30, 2011

And now, the must-watch exciting conclusion of the axion calculation saga on the whiteboards.

Last week we saw two episodes in which a calculation unfolded on our heroes’ whiteboards about making an exciting new particle, “the axion”, on Earth. Could axions be made inside an artificial Sun made by the National Ignition Facility? This summer, I was excited about this, but as you already know by now from reading tonight’s whiteboards, I made a terrible, terrible mistake.

To estimate the rate of axions I used the relative power produced by the Sun versus the small compressed sample produced at the National Ignition Facility. In both cases a material is made so hot that atoms are ripped apart into their constituents: electrons and nuclei. Such a gas is called a plasma, and plasma is sometimes called “the fourth state of matter” as it is a step hotter than just ordinary gas. It is not unfamiliar. The glowing orange material in a neon sign is a plasma.

I was comparing the large far-away plasma in the core of the Sun to the tiny, but close, plasma created in the lab. It initially looked like the laboratory won. To understand what was wrong, you first have to understand how the Sun produces its energy.

The strong nuclear interaction likes to bind protons and neutrons together. And Martha Stewart says, “It’s a good thing”. Without it, the only atoms we would ever have are hydrogen. If hydrogen were our only element, we’d have an unperiodic periodic table, with only one entry: hydrogen. In real life, the nucleus of every atom is held together by this force. And its strength is impressive. For example, in helium and every heavier element the protons are repelling each other. Same-sign charges (in the protons’ case, both positive) repel with a force that grows as the inverse square of the distance between them. A nucleus is extremely small, and those protons are so close they want to fly apart, badly. The strong interaction overcomes this repulsion and nuclei stay bound. That’s why it is called the “strong interaction” (or “strong force”).

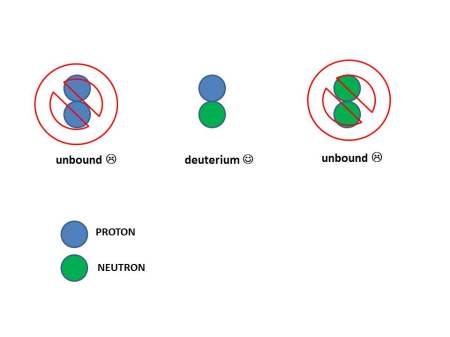

But there’s a wrinkle. If you try to bring just two together (either protons or neutrons) there is only one combination that is stable: a pairing of one neutron and one proton. The pairing of two protons or two neutrons is not.

It might seem that two protons are not bound because of their electric repulsion. But that would not explain why two neutrons are also not bound. The answer really lies within the constraints of the quantum mechanics of identical particles. It turns out that the only way to put two identical neutrons or protons together is if they have angular momentum, but then they are not bound. We teach our physics majors all about this at UCLA in our introductory quantum mechanics class. If you can take a quantum mechanics class, I highly recommend it.




The Northern Lights are an example of a plasma you can see. (National Geographic) The last three episodes’ whiteboards explored whether a dense, hot plasma could make the elusive axion particle.


You might think the strong force could combine any pairing of protons and neutrons. But quantum mechanics only allows a proton and a neutron to bind. The result is heavy hydrogen, or "deuterium".

The core of the Sun is full of protons but no free neutrons. So the only way to make energy from them is to convert one of those protons into a neutron so you can bind them. This bound state of a neutron and proton is still chemically hydrogen, but it has an extra neutron so it is called “heavy hydrogen”, or more technically deuterium. That’s the same “heavy” of “heavy water”. “Heavy” water is made with “heavy hydrogen”. But the reaction does not conserve electric charge so you need a light positively charged particle to fly away, and it turns out to be the anti-matter partner of an electron, which has a positive charge ($e^+$), and so is a “positron”. But that introduces a new problem. A positron is a type of particle called a “lepton” and, for reasons not yet understood, you can’t violate the conservation of the number of leptons. So you also need a neutrino ($\nu$) to be made as well to not create any net leptons. (These neutrinos were detected from the Sun over the last few decades. They changed our entire understanding of neutrinos but that’s a story for another day.) It’s easiest to see graphically:

Ultimately these deuteriums (deuteria?) undergo further reactions and the net reaction in the Sun is:

4 protons -> 1 helium nucleus (2 protons + 2 neutrons) + 2 neutrinos + lots of energy.

The released energy heats the core and makes the Sun shine. What happened to the positrons? They are antimatter and as soon as they find an electron (not long at all!) they annihilate into energy.

The problem is that first step: proton + proton -> proton + neutron + positron + neutrino. To make a bound state we had to convert a proton to a neutron. The strong interaction cannot do that, but the weak interaction can. It is a very weak process and that’s why it is called “the weak interaction”. It is so slow that it dominates the rate of the total fusion in the Sun.

The rate of energy production in the Sun is so slow that pound-for-pound you produce more energy than the Sun. Just sitting in front of your computer, digesting your last meal, you produce about 1 watt of power per kilogram of your body weight. The Sun produces only about 0.0002 watts per kilogram. The Sun is just bigger. A lot bigger. While it is tempting to think of it as a massive nuclear furnace, it really is just smoldering. We’re lucky too. If the Sun’s reactions were not throttled by the weak interaction we would be living next to a nuclear bomb, not a star.

But the good people at the National Ignition Facility cannot wait around for such slow reactions. Instead, they use heavy hydrogen (deuterium) and an even heavier hydrogen with two neutrons and one proton, or “tritium”. Their net reaction is:

deuterium + tritium -> helium + neutron + energy.

No neutrons or protons changed their identity. They just changed who they hang out with. This proceeds by the strong interaction and also releases massive energy. This reaction is about $10^{25}$ times faster than the proton + proton fusion in the Sun. And there’s the rub. You can’t compare the two Suns directly. The boys’ calculation was off by “only” a factor of $10^{25}$.

Before the taping of tonight’s episode, many of the crew members asked me why there was an unhappy face at the end of one of the whiteboards. Now you know why.
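The pound-for-pound comparison earlier in this post is easy to check. The sketch below is a rough estimate only: the Sun's luminosity and mass are standard values that do not appear in the post, and the resting person (80 watts, 70 kilograms) is an assumed, illustrative figure rather than a measurement.

```python
# Standard solar values (not quoted in the post).
SUN_LUMINOSITY_W = 3.828e26
SUN_MASS_KG = 1.989e30

# ASSUMED figures for a person sitting at a computer; purely illustrative.
PERSON_POWER_W = 80.0
PERSON_MASS_KG = 70.0


def watts_per_kilogram(power_w, mass_kg):
    """Power output divided by mass."""
    return power_w / mass_kg


sun_w_per_kg = watts_per_kilogram(SUN_LUMINOSITY_W, SUN_MASS_KG)
person_w_per_kg = watts_per_kilogram(PERSON_POWER_W, PERSON_MASS_KG)

print(f"Sun:    {sun_w_per_kg:.5f} W/kg")
print(f"Person: {person_w_per_kg:.2f} W/kg")
print(f"Person out-produces the Sun by a factor of about {person_w_per_kg / sun_w_per_kg:.0f}")
```

With these inputs the Sun lands near the 0.0002 watts per kilogram quoted above, and the person near 1 watt per kilogram, thousands of times more per kilogram.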

S05E01 & S05E02: The Skank Reflex Analysis & The Infestation Hypothesis

September 22, 2011

Some of you may be wondering why two episodes of The Big Bang Theory were broadcast back-to-back tonight. Surely it cannot be a mere coincidence that this is also the first time we have a multi-episode arc on the whiteboards.

Since the beginning of the series, the executive producers have asked me to have Leonard and Sheldon working on solving a real problem on the boards over several episodes. But it wasn’t all that easy. If the boys are working on a known problem with a known solution, then anybody could answer it and spoil the surprise. But if they were working on a known problem with no known solution, there are already hundreds if not thousands of minds working on it, and how could they (meaning I) solve it by season’s end?

We needed a fresh, tractable problem. And over the summer I had an idea. The idea would allow physicists to make a never-before-seen particle. And it could solve the dark matter problem. Perhaps our galaxy is filled with these particles. They would provide the gravitational glue that keeps the galaxy rapidly spinning, but be so weakly interacting that they would usually pass through the entire Earth undetected. I thought I had found a new way of making a particle that was hypothesized over three decades ago, “The Axion”.

The axion’s role in solving the dark matter problem is actually just a nice side effect. These particles were originally conceived in the late 1970’s to give a natural explanation of why the strong nuclear force (a.k.a. quantum chromodynamics) obeys certain symmetries so well, too well. It is a happy accident that axions could also account for all the dark matter in the galaxy. The axion solves two important unrelated problems at once, and if elegance were a guide then theorists would likely consider the matter settled.

But physics is an experimental science and sheer elegance is not enough. The history of physics is filled with ideas that were simple, elegant, and wrong. We have to find the axions’ signature experimentally.

In very dense environments at high temperature, charged particles will start to radiate axions efficiently. The core of the Sun is over 13 million kelvins (over 23 million degrees Fahrenheit) and is 150 times the density of water. As shown on the whiteboards’ Feynman diagrams, electrons in this environment could produce a detectable number of axions. Because they interact so weakly they penetrate the entire Sun, leaving in all directions. A rare few strike the Earth.

So all astrophysicists have to do is find them leaving the Sun. CERN is not only home to the Large Hadron Collider, but also a clever telescope that points at the Sun. But this is no ordinary telescope. Physicists need not only to detect these weakly interacting axions efficiently enough to find a signal, but to do so in a way that cannot be mimicked by more mundane processes, called backgrounds. One of the funny behaviors of axions is that inside a strong, uniform magnetic field they will convert into light. Specifically, one axion will convert to one single particle of light, a photon. Because the axions are made in the heat of the core of the Sun they have an energy corresponding to 13 million kelvins. So each photon from a converted axion from the Sun will actually be an energetic X-ray.
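To see why a temperature of 13 million kelvins means X-rays, here is a rough order-of-magnitude sketch. It takes the characteristic energy to be simply the Boltzmann constant times the temperature, which is a simplifying assumption and not a calculation of the actual axion spectrum; the constants are standard values and the function names are made up for illustration. It also double-checks the Fahrenheit figure quoted above.

```python
BOLTZMANN_EV_PER_K = 8.617333e-5   # Boltzmann constant in eV per kelvin
HC_EV_NM = 1239.842                # Planck constant times speed of light, in eV * nm

core_temperature_k = 13e6          # "over 13 million kelvins", from the post


def kelvin_to_fahrenheit(kelvin):
    """Convert an absolute temperature to degrees Fahrenheit."""
    return kelvin * 9.0 / 5.0 - 459.67


def thermal_energy_ev(kelvin):
    """Characteristic thermal energy k_B * T, in electronvolts."""
    return BOLTZMANN_EV_PER_K * kelvin


def photon_wavelength_nm(energy_ev):
    """Wavelength of a photon carrying the given energy, in nanometers."""
    return HC_EV_NM / energy_ev


fahrenheit = kelvin_to_fahrenheit(core_temperature_k)
energy_kev = thermal_energy_ev(core_temperature_k) / 1000.0
wavelength_nm = photon_wavelength_nm(energy_kev * 1000.0)

print(f"Core temperature: {fahrenheit / 1e6:.1f} million degrees Fahrenheit")
print(f"Characteristic energy: {energy_kev:.2f} keV")
print(f"Photon wavelength: {wavelength_nm:.2f} nm")
```

A photon with roughly a kiloelectronvolt of energy and a wavelength near one nanometer sits in the X-ray band, hundreds of times more energetic than visible light.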

Every morning and every evening, astrophysicists at CERN take a prototype magnet borrowed from the Large Hadron Collider project and point it at the Sun. They call their device CAST, the CERN Axion Solar Telescope. If they ever see X-rays emerging from the magnetic field, that would be a tell-tale sign of axions. They can check that they aren’t seeing local radioactive backgrounds by pointing the telescope away from the Sun. Unfortunately, to date they have seen none.

Zillions of axions are wasted in this technique. The Sun would be pouring out axions in all directions, but only those entering the tiny front aperture of the magnet are detectable. That’s an efficiency of about 1 in 10²⁵ axions. And even then, only a tiny fraction of these would be converted.

This summer I wondered if we could do better. The main problem is that the Earth is so far from the Sun. Meanwhile, physicists at Lawrence Livermore National Laboratory in California are making an artificial Sun in the laboratory. They aim 192 lasers at a small pellet of heavy hydrogen and for a short time they achieve the density and temperature of the Sun. Exceed them, even. And not just a short time but a very short time, about a hundred billionths of a second. They perform this amazing feat to copy the fusion power of the Sun, as a clean, almost limitless source of energy for us on Earth. It is called fusion because the core of the Sun fuses protons together into heavier atomic nuclei, mostly helium. Because the resulting nucleus is less massive than the sum of the original protons, by Einstein’s famous formula E=mc², the missing mass is converted to enormous amounts of energy.
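The missing mass can be worked out in a few lines. The sketch below uses standard tabulated atomic masses for hydrogen-1 and helium-4 and treats the Sun's fusion as a net conversion of four hydrogen atoms into one helium atom, skipping the intermediate steps. The function name is invented for this example.

```python
# Atomic masses in unified atomic mass units (u), standard tabulated values.
MASS_HYDROGEN_1_U = 1.007825
MASS_HELIUM_4_U = 4.002602
MEV_PER_U = 931.494  # energy equivalent of 1 u from E = m c^2


def fusion_energy_mev():
    """Return (energy released in MeV, fraction of the initial mass converted)."""
    mass_in = 4 * MASS_HYDROGEN_1_U
    mass_deficit = mass_in - MASS_HELIUM_4_U
    return mass_deficit * MEV_PER_U, mass_deficit / mass_in


energy_mev, fraction = fusion_energy_mev()
print(f"Energy released per helium nucleus: {energy_mev:.2f} MeV")
print(f"Fraction of mass converted to energy: {fraction:.2%}")
```

Less than one percent of the mass disappears, but multiplied by c² that is a great deal of energy per gram of fuel.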

When the process is successful, we can think of “burning” hydrogen into helium to release energy, in analogy with how burning a log releases energy as heat. The major difference is that rather than the chemical reaction that drives fire, this is a nuclear reaction. Nuclear reactions typically release a million times more energy than chemical reactions for a given supply of fuel. The physicists at Lawrence Livermore call the successful implosions “ignition” and built the National Ignition Facility with its 192 powerful lasers to achieve it.

The National Ignition Facility is the prototype for what its physicists think will be a power plant as powerful as the big coal plants or nuclear power plants. Even a 1000 megawatt plant is still a lot less power than the Sun’s 10¹⁷ gigawatts, but we can put the magnet much closer: we could put an axion telescope 10 meters away instead of 150 billion meters away, our distance from the Sun. Since the rate improves as the square of the distance drops, that is a whopping improvement of 10²⁰, more than making up for the lower power.
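The back-of-the-envelope comparison can be sketched as follows. The Sun's power and the two distances are the numbers quoted in the post. The 1 gigawatt plant is an assumption (roughly the scale of a large coal or nuclear plant), as is the crude idea that the axion output simply scales with total power. The function names are made up for this illustration.

```python
SUN_POWER_GW = 1e17      # Sun's output, as quoted in the post
SUN_DISTANCE_M = 150e9   # Earth-Sun distance, as quoted in the post
LAB_DISTANCE_M = 10.0    # proposed distance of the axion telescope from the source


def distance_gain(far_m, near_m):
    """Inverse-square gain in flux from moving the detector closer to the source."""
    return (far_m / near_m) ** 2


def net_gain(plant_power_gw):
    """Distance gain reduced by the ratio of source powers (assumed scaling)."""
    return distance_gain(SUN_DISTANCE_M, LAB_DISTANCE_M) * plant_power_gw / SUN_POWER_GW


print(f"Gain from distance alone: {distance_gain(SUN_DISTANCE_M, LAB_DISTANCE_M):.2e}")
print(f"Net gain for an assumed 1 GW source: {net_gain(1.0):.0f}")
```

Under these assumptions the distance alone buys a factor of about 10²⁰, and the net improvement over the Sun comes out in the thousands.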

The numbers looked really good. I was excited. Accounting for distance and power, I reckoned I could do about 1000 times better than the CERN Axion Solar Telescope. That didn’t even account for the fact that the background would be lower since the artificial Sun is only on for 100 billionths of a second, not all day. And since the magnets don’t have to follow the Sun in the sky we could make them much larger. The emission mechanism even looked more efficient than the Sun.

But that’s not the whole story. This was actually a three-episode whiteboard arc. I suppose CBS wanted to create some suspense and the third episode will not be aired until next week. That next episode contains the result of my summer’s worth of calculations. If you think you know the answer, comment below. Otherwise, tune in next week to find out if we are on the verge of creating and detecting axions on Earth.

Since the beginning of the series, the executive producers have asked me to have Leonard and Sheldon working on solving a real problem on the boards over several episodes. But it wasn’t all that easy. If the boys are working on a known problem with a known solution, then anybody could answer it and spoil the surprise. But if they were working on a known problem with no known solution, there are already hundreds if not thousands of minds working on it, and how could they (meaning I) solve it by season’s end?

We needed a fresh, tractable problem. And over the summer I had an idea. The idea would allow physicists to make a never-before-seen particle. And it could solve the dark matter problem. Perhaps our galaxy is filled with these particles. They would provide the gravitational glue that holds the rapidly spinning galaxy together, but be so weakly interacting that they would usually pass through the entire Earth undetected. I thought I had found a new way of making a particle that was hypothesized over three decades ago, “The Axion”.

Not Galileo's optics: the CERN Axion Solar Telescope (CAST) is actually a large magnet pointed at the Sun.

The National Ignition Facility focuses 192 lasers onto a small pellet, briefly creating an artificial Sun on Earth.

S04E20: The Herb Garden Germination

April 5, 2011

Tonight is our first ever guest post. It is by my close friend Kristina Lerman. Kristina and I met the first week of freshman year, when we were both physics majors. We spent many years together working on problem sets, which is how physicists like to spend their twenties. After getting her Ph.D. in physics from the University of California, Santa Barbara, Kristina became an expert in the mathematics of networks, especially online networks, long before “social networking” became a buzzword. So when there was a line in tonight’s script on meme theory by Amy Farrah Fowler, I immediately called Kristina for help. Now she’s been kind enough to explain to us the science behind tonight’s episode. So without further ado…

——————————–

(By Kristina Lerman)

The idea that information moves through a social group like an infectious disease has itself proved to be a powerful meme. This analogy has informed sociologists’ attempts to understand many diverse phenomena, including adoption of innovations, the spread of fads and fashion, word-of-mouth recommendations, and social media campaigns. The analogy becomes even stronger when social interactions are encoded within a friendship graph, the so-called social network. In a social epidemic each informed, or “infected,” individual infects her network neighbors with some probability given by the transmissibility, which measures how contagious the infection is. Understanding social epidemics is crucial to identifying influential people, predicting how far epidemics will spread, and identifying methods to enhance or impede their progress. Advertisers and social media consultants have been busy devising “viral” marketing strategies. Much like an epidemiologist might advise people on ways to reduce the transmissibility of a virus (wash hands), or if that fails, figure out who should be vaccinated to limit its spread (kindergarten teachers in many cases), marketing types are interested in identifying individuals who will generate the greatest buzz if they receive free products and other incentives.

Though theoretical progress has been brisk, until recently, empirical studies of epidemics were limited to taking case histories of sick people and attempting to trace their contacts. The advent of social media has changed that. People are joining social media sites such as Twitter, Digg, Flickr, and YouTube to find interesting content and connect with friends and like-minded people through online social networks. Traces of human activity that are exposed by the sites have given scientists treasure troves of data about individual and group behavior. This data has given social science an empirical grounding that many physicists find irresistible. As a result, physicists (author included) have flooded the field, much to the chagrin of practicing social scientists. In the culture wars of science, physicists often come off as arrogant, like Sheldon, but that is the price of being right.

The detailed data about human behavior on social media sites has allowed us to quantitatively study the dynamics of social epidemics. In my own work I study how information spreads on Digg and Twitter. These sites allow users to add friends to their social network whose activities they want to follow. A user becomes infected by voting for (digging) or tweeting a story and exposes her network neighbors to it. Each neighbor may in turn become infected (i.e., vote or retweet), exposing her own neighbors to it, and so on. This way interest in a story cascades through the network. This data enables us to trace the flow of information along social links. We found that social epidemics look and spread very differently from diseases on networks. Contrary to our expectations, the vast majority of information cascades grew slowly and failed to reach “epidemic” proportions. In fact, on Digg, these cascades reached fewer than 1% of users.

There are a number of factors that could explain this observation. Perhaps users modulate the transmissibility of stories to keep it within a narrow range near the threshold to prevent information overload. Perhaps the structure of the network (e.g., clustering or communities) limits the spread of information. Or it could be that the mechanism of social contagion, in other words, how people decide to vote for a story once their friends have voted for it, prevents interest in stories from growing. We examined these hypotheses through simulations of epidemic processes on networks and empirical study of real information cascades.

We found that while network structure somewhat limits the growth of cascades, a far more dramatic effect comes from the social contagion mechanism. Unlike the standard models of disease spread used in previous works on epidemics, repeated exposure to the same story does not make the user more likely to vote for it. We defined an alternative contagion mechanism that fits empirical observations and showed that it reproduces the observed properties of real information cascades on Digg.

(Longer version: Specifically, we simulated the independent cascade model that is widely used to study epidemics on networks. Each simulated cascade began with a single seed node who voted for a story. By analogy with epidemic processes, we call this node infected. The susceptible followers of the seed node decide to vote on the story with some probability given by the transmissibility, λ (lambda). Every node can vote for the story once, so at this point the seed node is removed, and we repeat the process with the newly infected nodes. A node who is following n voting nodes has n independent chances to decide to vote. Intuitively, this assumption implies that you are more likely to become infected if many of your friends are infected. )

After some time, no new nodes are infected, and the cascade stops. The final number of infected nodes gives the cascade size. These are shown in the figure above, where each point represents a single cascade with the y-axis giving the final cascade size and the x-axis giving the transmissibility, λ. Blue dots represent cascades on the original Digg graph, pink dots represent cascades on a randomized version of the Digg graph, and the gold line gives the theoretical predictions. In both simulations, there exists a critical value of λ, the epidemic threshold, below which cascades quickly die out and above which they spread to a significant fraction of the graph.
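The independent cascade model described above can be sketched in a few lines. This is a toy version on a small random network, not the Digg graph and not the code used in the study. The network generator, the sizes, and the two values of λ are hypothetical choices made only to show the threshold behavior.

```python
import random


def random_follower_graph(num_nodes, followers_per_node, rng):
    """Hypothetical toy network: every node gets a fixed number of random followers."""
    graph = {}
    for node in range(num_nodes):
        candidates = [n for n in range(num_nodes) if n != node]
        graph[node] = rng.sample(candidates, followers_per_node)
    return graph


def simulate_cascade(followers, seed, transmissibility, rng):
    """Independent cascade: each new voter gets one chance to infect each follower."""
    infected = {seed}
    frontier = [seed]
    while frontier:
        next_frontier = []
        for voter in frontier:
            for follower in followers[voter]:
                if follower not in infected and rng.random() < transmissibility:
                    infected.add(follower)
                    next_frontier.append(follower)
        frontier = next_frontier  # voters that already had their chance are removed
    return len(infected)


def mean_cascade_size(followers, transmissibility, trials, rng):
    """Average final cascade size over several randomly seeded cascades."""
    nodes = list(followers)
    sizes = [simulate_cascade(followers, rng.choice(nodes), transmissibility, rng)
             for _ in range(trials)]
    return sum(sizes) / trials


rng = random.Random(42)
graph = random_follower_graph(num_nodes=1000, followers_per_node=10, rng=rng)
for lam in (0.02, 0.3):  # one value below and one above the toy graph's threshold
    print(f"lambda = {lam}: mean cascade size = {mean_cascade_size(graph, lam, 20, rng):.1f}")
```

With ten followers per node, each voter infects on average 10λ new nodes, so the threshold for this toy graph sits near λ = 0.1. Cascades well below it fizzle after a handful of votes, and cascades well above it sweep through most of the network.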

Comparing the theoretical and simulation results to real cascades presents a puzzle. Why are cascades so small? According to our cascade model, only transmissibilities in a very narrow range near the threshold produce cascades of the appropriate size of ~500 votes. Clearly, the network structure is not enough to explain the difference. To delve deeper, we looked at the contagion mechanism itself. We measured the probability that a Digg user votes for a story given that n of his friends have voted. We found that the independent cascade model grossly overestimates the probability of a vote even with 2 or 3 voting friends. In fact, we found that multiple exposures to a story only marginally increase the probability of voting for it.
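For reference, here is what the independent cascade model predicts for a user with n voting friends: n independent chances, each succeeding with probability λ. The value λ = 0.1 below is a hypothetical number chosen only for illustration, not a measured Digg value.

```python
def icm_vote_probability(transmissibility, num_voting_friends):
    """Probability of voting after n independent exposures under the cascade model."""
    return 1.0 - (1.0 - transmissibility) ** num_voting_friends


lam = 0.1  # hypothetical transmissibility
for n in range(1, 4):
    print(f"{n} voting friend(s): predicted probability = {icm_vote_probability(lam, n):.3f}")
```

Under this model the probability nearly doubles going from one voting friend to two, which is exactly the kind of growth the real data did not show.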

After simulating information cascades using the new contagion mechanism, we found that their size is an order of magnitude smaller than before, as shown in the figure above. The size of the real Digg cascades is similar to that of the simulated cascades, giving us confidence that we have uncovered the mechanism that limits the spread of information. These findings underscore the fundamental difference between the spread of information and the spread of disease: despite multiple opportunities for infection within a social group, people are less likely to become spreaders of information with repeated exposure.

——————————–

(By Kristina Lerman)

AMY: Meme theory suggests items of gossip are like living organisms that seek to reproduce using humans as their hosts.

In this episode, Sheldon and Amy discover that memes, or items of gossip and other information, are like infectious organisms that reproduce themselves using humans as hosts. They engage in a bit of “memetic epidemiology” as they conduct social experiments on their friends to test the theory that tantalizing pieces of gossip make stronger, more virulent memes that spread faster and farther among their friends than mundane pieces of information.

Cascade size as a function of transmissibility λ (lambda) for simulated cascades on the Digg graph and the randomized graph with the same degree distribution. Heterogeneous mean field predicts cascade size as a fraction of the nodes affected. The line (hmf) reports these predictions multiplied by the total number of nodes in the Digg network.

S04E19: The Zarnecki Incursion

April 2, 2011

(***SPOILER ALERT**** If you have not seen the episode yet, you may not want to read this post, which includes a minor spoiler.)

In this latest episode, the boys know how an internal combustion engine works. Let’s learn how it works, and maybe it will be as useful someday to you as it was for them.

Not only biologists do dissections. When I was in college, we dissected an internal combustion engine. Not only was it easily as educational as slicing up a frog, but it also had the advantages of not smelling of formaldehyde and not making us feel really bad for a frog.

But first let’s dissect the phrase itself: “internal combustion engine”.

A motor is any machine that converts stored energy into useful mechanical motion, or as a physicist would say, work. Even a simple rower with an oar is converting his recent meal into motion of a boat and is a motor. But typically if the device starts with heat energy, as opposed to electricity or other stored power, we specifically call the motor an engine.

Another word for burning is combustion. That provides the heat for our engine. A burning log releases heat energy. But fossil fuels such as gasoline and natural gas are able to produce more heat per gram through combustion than nearly any other substance. The only exception is hydrogen, producing three times more energy per gram through combustion than methane or gasoline. Given that the cost of lifting jet fuel is a major expense for flying an airplane, I don’t know why airplanes don’t use hydrogen fuel.
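The factor of three can be checked with approximate textbook heating values. The numbers below are rounded, commonly quoted figures in megajoules per kilogram and should be read as rough assumptions, since the exact values depend on the fuel blend and on how the heat is measured.

```python
# Approximate heat of combustion in megajoules per kilogram (rounded textbook values).
SPECIFIC_ENERGY_MJ_PER_KG = {
    "hydrogen": 142.0,
    "methane": 55.5,
    "gasoline": 46.0,
}


def energy_ratio(fuel, reference):
    """How many times more heat per unit mass one fuel releases than another."""
    return SPECIFIC_ENERGY_MJ_PER_KG[fuel] / SPECIFIC_ENERGY_MJ_PER_KG[reference]


for reference in ("methane", "gasoline"):
    print(f"hydrogen vs {reference}: {energy_ratio('hydrogen', reference):.1f} times")
```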

Typically the mechanical work is first performed by a fluid, such as steam or a hot gas. When the fluid that is heated is separate from what provides the heat, it is called an external combustion engine. For example, in a steam engine, wood or some material is burned, which in turn heats the steam which is pressed into service. But in an internal combustion engine the same fluid that was burned does the work. The simplicity leads to an economy of parts and efficiency.

To make a long story short, if you put a small amount of explosive fuel and air in a small volume and ignite it, a large amount of energy is released as expanding gas. If you are clever enough to do work with this gas, you have built an engine.

Such is the role of the piston. When the gas explodes, it pushes the piston and does work. But that’s not the whole story of the piston. The piston and little ports called valves perform a simple dance that performs all the functions of an internal combustion engine. The engine we dissected in college was called a two-stroke engine because it performed all its work in just two steps. But far more common, and used in automobiles, is the four-stroke engine which is even easier to understand.

The four steps of the dance are:

Stroke 1: (the “Intake Stroke”) The piston pulls back just as the valve opens to a source of fuel and air, usually already mixed just right. The pulling back of the piston fills the cylinder with explosive gas through the hole left by the open valve.

Stroke 2: (the “Compression Stroke”) The valve closes and the piston moves forward. This compresses the gas, but more importantly puts the piston in position to be moved outward by the upcoming explosion.

Stroke 3: (the “Power Stroke”) The fuel/air mixture is ignited with a spark and the piston is pushed outward with an enormous force. This is the point in the cycle that produces useful mechanical work. In a car the moving piston turns a shaft called the crankshaft so that the motion of the piston quickly becomes rotational energy. The car itself works with energies stored as rotations, eventually turning the wheels. The wheels turn against the road, and the force of friction between the tire and the road pushes the car forward (or backward if your transmission is in a reverse gear).

Stroke 4: (the “Exhaust Stroke”) A different valve opens and the piston moves forward again so the burned gases can be expelled. This is the exhaust.

Notice that the piston goes in and out of the cylinder twice, while only producing work once.
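The bookkeeping of the four strokes can be sketched as a tiny simulation. Each stroke is one pass of the piston along the cylinder, which is half a turn of the crankshaft. The function names and the 3000 rpm figure are hypothetical choices for this example.

```python
from itertools import cycle, islice

STROKES = ("intake", "compression", "power", "exhaust")
REVOLUTIONS_PER_STROKE = 0.5  # one piston stroke is half a turn of the crankshaft


def run_engine(num_strokes):
    """Step one cylinder through its strokes; return (crank revolutions, power strokes)."""
    revolutions = 0.0
    power_strokes = 0
    for stroke in islice(cycle(STROKES), num_strokes):
        revolutions += REVOLUTIONS_PER_STROKE
        if stroke == "power":
            power_strokes += 1
    return revolutions, power_strokes


def power_strokes_per_minute(rpm):
    """A four-stroke cylinder fires once every two crankshaft revolutions."""
    return rpm / 2


print(run_engine(4))                   # one full cycle of a single cylinder
print(power_strokes_per_minute(3000))  # hypothetical engine speed of 3000 rpm
```

One full cycle takes two turns of the crankshaft and delivers a single power stroke, which is why multi-cylinder engines stagger their pistons.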

The process repeats itself thousands of times per minute. Typically each piston is in a different part of the cycle so that the piston doing the work (on its power stroke) can move the other pistons through their strokes. The crankshaft turns while the pistons go in and out. Such motion of the pistons is called reciprocating, and this kind of engine is often called a reciprocating engine.

It works fine once it is going, but getting it started is the trick. Anyone who has turned the ignition many times on a winter morning knows how hard this can be. Or you might be faced with pulling the starter cord on a lawnmower.

Any leaks around the piston are bad news. They cause a loss of compression, perhaps causing Leonard’s problem. Meanwhile, oil around the crankshaft can leak into the combustion cylinder and burn, producing smoke and a loss of oil.

If Leonard’s problem was that his car lost too much oil, the damage to the engine means we may never see him drive it again.

Just like dissecting a frog, dissecting a model airplane engine is a terrific way to learn about how the engine (instead of frog) works.

A piston for a Chevy engine. The piston converts the energy of the exploding gas into mechanical energy.

Stroke 1: (the “Intake Stroke”) The piston pulls back just as the valve opens to a source of fuel and air, usually already mixed just right. The pulling back of the piston fills the cylinder with explosive gas through the hole left by the open valve.

Stroke 2: (the “Compression Stroke”) The valve closes and the piston moves forward. This compresses the gas, but more importantly puts the piston in position to be moved outward by the upcoming explosion.

Stroke 3: (the “Power Stroke”) The fuel/air mixture is ignited with a spark and the piston is pushed outward with an enormous force. This is the point in the cycle that produces useful mechanical work. In a car the moving piston turns a shaft called the crankshaft so that the motion of the piston quickly becomes rotational energy. The car itself works with energies stored as rotations, eventually turning the wheels. The wheels turn against the road, and the force of friction between the tire and the road pushes the car forward (or backward if your transmission is in a reverse gear).

Stroke 4: (the “Exhaust Stroke”) A different valve opens so the burned gases can be expelled. This is the exhaust.

Notice that the piston goes in and out of the cylinder twice, while only producing work once.

The process repeats itself thousands of times per minute. Typically each piston is in a different part of the cycle so that the piston doing the work (on its power stroke) can move the other pistons to do their job on each stroke. The crankshaft turns while the pistons go in and out. Such motion of the pistons is called reciprocating, and often this kind of engine is called a reciprocating engine.
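As a rough sketch of the bookkeeping above: each stroke is one sweep of the piston, which is half a turn of the crankshaft, so a full four-stroke cycle takes two turns and delivers work only once. The 3000 rpm figure and the function name below are only illustrative assumptions.

```python
# The four strokes, in order, and whether each one delivers work to the crankshaft.
FOUR_STROKE_CYCLE = [
    ("intake", False),
    ("compression", False),
    ("power", True),
    ("exhaust", False),
]

# Each stroke is one sweep of the piston: half a crankshaft revolution.
REVOLUTIONS_PER_STROKE = 0.5


def power_strokes_per_minute(crank_rpm: float, cylinders: int = 1) -> float:
    """Power strokes per minute for an engine turning at crank_rpm."""
    revolutions_per_cycle = REVOLUTIONS_PER_STROKE * len(FOUR_STROKE_CYCLE)
    power_strokes_per_cycle = sum(1 for _, does_work in FOUR_STROKE_CYCLE if does_work)
    cycles_per_minute = crank_rpm / revolutions_per_cycle
    return cycles_per_minute * power_strokes_per_cycle * cylinders


# Hypothetical engine speed, chosen only for illustration.
print(power_strokes_per_minute(3000))     # one cylinder
print(power_strokes_per_minute(3000, 4))  # four cylinders sharing one crankshaft
```

With several cylinders staggered around the cycle, the power strokes are spread out so that some piston is almost always pushing.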

It works fine once it is going, but getting it started is the trick. Anyone who has turned the ignition many times on a winter morning knows how hard this can be. Or you might be faced with pulling the starter cord on a lawnmower.

Any leaks around the piston are bad news. They cause a loss of compression, perhaps causing Leonard’s problem. Meanwhile oil around the crankshaft can leak into the combustion cylinder and burn, producing smoke and loss of oil.

If Leonard’s problem was that his car lost too much oil, the damage to the engine means we may not be seeing him drive it ever again.

S04E18: The Prestidigitation Approximation

March 13, 2011

This week, Sheldon could not figure out Howard’s card trick using math. But that was fiction. Playing cards and mathematics go hand in hand. In real life, Sheldon’s analysis may well have worked.

How many ways are there to shuffle a deck of cards? This question is a classic example of a branch of mathematics called combinatorics. The first card can be any card in the deck, so it is 1 of 52 possibilities. The second card is more constrained because one card has already been chosen. So it has 1 of 51 possibilities. Now suppose you are asking what is the number of ways the first card is what it is AND that the second card is what it is. In this case AND means multiply the possibilities, so there are 52*51=2,652 different ways to shuffle the first two cards.

Carrying on for the rest of the deck, the number of combinations is just 52*51*50*49*…*1. The last card has no choice, given that it is the only card left, so it is a factor of “1”. Mathematicians have a shorthand for this result called 52!, or 52 factorial. It is a number so large that even typing it into Google won’t give all the digits, even though it is far less than a googol. Instead you can find it on Sheldon’s board:

(The last 12 zeroes are not an approximation. There are five multiples of ten between 1 and 52: 10, 20, 30, 40 and 50. The five odd multiples of 5 (5, 15, 25, 35 and 45) each find a factor of 2 to give another five factors of ten. And since 25 and 50 each contribute one extra factor of 5 that also finds a factor of 2, there are 12 zeroes at the end.)
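For readers who want to see the digits for themselves, here is a minimal Python sketch. It computes 52! exactly and checks the count of trailing zeroes two ways: by looking at the digits, and by counting the factors of 5 as in the argument above. The helper names are my own.

```python
import math


def trailing_zeros(n: int) -> int:
    """Count the zeroes at the end of n's decimal representation."""
    digits = str(n)
    return len(digits) - len(digits.rstrip("0"))


def factors_of_five(limit: int) -> int:
    """Count how many times 5 divides limit! by summing limit // 5, limit // 25, ..."""
    count, power = 0, 5
    while power <= limit:
        count += limit // power
        power *= 5
    return count


orderings = math.factorial(52)  # Python integers are exact, so no digits are lost
print(orderings)
print(len(str(orderings)), "digits")
print(trailing_zeros(orderings), "trailing zeroes")
print(factors_of_five(52), "factors of 5 in 52!")
```

Factors of 2 are far more plentiful than factors of 5, so the number of fives alone sets the number of trailing zeroes.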

More compactly, this is 8×10^67. Or in plain English, the number is “80 unvigintillion”. The British have a different word for it, “80 undecillion”, but they don’t even get “a billion” right. The Brits traditionally call what Americans call a “trillion” a “billion”, because they count the American “billion” as a “thousand million”. Since they don’t call a million a “thousand thousand”, consistency is apparently not their strong suit.

(To be fair, the UK switched from this so-called “long-scale” naming convention to the “short scale” one used by the US and others in 1974, but the long scale persists in some countries. And to think they scoff at Americans for not using the metric system.)

In any language it is still a large number. Even if every one of the 7 billion men, women, and children on Earth played one billion card games every year for a billion years, they would not even make a dent in the number of possible shuffled decks of cards.
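The size of that “dent” can be checked with a few lines of Python. This is only a sketch of the arithmetic in the paragraph above, under the generous assumption that every single game uses an ordering never seen before.

```python
import math
from fractions import Fraction

people = 7 * 10**9                  # everyone on Earth, as in the text
games_per_person_per_year = 10**9   # one billion games a year
years = 10**9                       # for a billion years

games_played = people * games_per_person_per_year * years

# Best case: every game starts from a brand new ordering of the deck.
fraction_seen = Fraction(games_played, math.factorial(52))

print(f"{games_played:.1e} games played")
print(f"{float(fraction_seen):.2e} of all possible orderings seen")
```

The fraction comes out below one part in 10^40.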

We may well ask a different question. Have there likely ever been two games played from the same shuffled deck? That is a different exercise in combinatorics. The chance is much higher. The answer is not just the number of games ever played divided by that big number. An example of this effect is the classic question: “What is the chance that in a room of 23 people, two have the same birthday?” You might naively guess 23/365 or 6%. But it turns out to be greater than a 50% chance. Among 57 people, there is a 99% chance that two have the same birthday. Since twins sometimes hang out together and other correlations may be found, the real chance is higher, but we are assuming everyone’s birthday in the room is independent.

If a 50% chance seems surprisingly large for one pair among 23 people to have the same birthday, remember we haven’t chosen which day. We are not asking the chance they have your birthday or Britney’s birthday, but that any pair has the same birthday, no matter what day. Luckily, the same question can be asked in a more illuminating way: What is the probability that nobody in the group has the same birthday as another? If there is a 50% chance that none have the same birthday, then there is a 50% chance that some pair does.

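Phrased the second way, the odds are more easily calculated. The first person has no chance of matching on his own with anyone else. The second still has 364 out of 365 chances of not matching. The third has 363 chances in 365. And so on. Multiplying these 23 factors:

(365/365) × (364/365) × (363/365) × … × (343/365) = 0.49

That is a 49% chance no pair has the same birthday. And hence a 51% chance that some pair does.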

(The occasional presence of February 29 would not change our result much, and I ignored the five million people born on that day.)
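The birthday product is tedious by hand but short in code. Here is a minimal sketch, assuming 365 equally likely and independent birthdays as in the text; the function name is my own.

```python
def prob_no_shared_birthday(people: int, days: int = 365) -> float:
    """Chance that `people` independent birthdays are all different."""
    p = 1.0
    for already_taken in range(people):
        # The next person must avoid every birthday already taken.
        p *= (days - already_taken) / days
    return p


for group in (23, 57):
    none_match = prob_no_shared_birthday(group)
    print(f"{group} people: {none_match:.4f} no match, {1 - none_match:.4f} some pair matches")
```

For 23 people the loop multiplies exactly the 23 factors described above, running from 365/365 down to 343/365.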

So back to our problem of what is the chance that no two card games have ever started with the same deck. I’ll start with the assumption that every deck was well enough shuffled that they were all independent. Given the number of drunk poker players and the fact that every purchased new deck starts out the same, I know this is a poor assumption. Repeating the birthday calculation for decks of cards, using the big number above, is left as an exercise for the comments.
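For anyone attempting the exercise, here is one possible sketch rather than a definitive answer. Multiplying out that many factors directly is hopeless, so it uses the standard approximation that the chance of at least one repeat among n independent draws from N equally likely outcomes is about 1 − exp(−n(n−1)/(2N)). The number of games is a pure assumption: I reuse the absurdly generous 7×10^27 from the earlier paragraph, not an estimate of games actually played.

```python
import math


def prob_some_repeat(games: float, outcomes: int) -> float:
    """Approximate chance that at least two of `games` independent, uniformly
    random draws from `outcomes` possibilities coincide."""
    pairs = games * (games - 1) / 2
    # -expm1(-x) equals 1 - exp(-x) but keeps precision when x is tiny.
    return -math.expm1(-pairs / outcomes)


# Sanity check against the birthday problem: 23 people, 365 days.
print(prob_some_repeat(23, 365))

# Hypothetical upper bound on games played (an assumption, not data).
assumed_games = 7e27
print(prob_some_repeat(assumed_games, math.factorial(52)))
```

Under these assumptions, even that impossible number of perfectly shuffled games gives well under a one-in-a-trillion chance of a repeat. Real decks are not perfectly shuffled, so the true chance is surely higher.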

Martin Gardner wrote an article every month about “recreational mathematics” (Yes, there is such a thing) for the popular magazine Scientific American. Mathematics of card tricks was an expertise of Gardner, just as Sheldon was reckoning on the white boards. Many tricks were published in his 1956 book Mathematics, Magic and Mystery. Here’s one of Martin Gardner’s simpler card tricks based on mathematics that you can now use to amaze and entertain your friends for hours.

Start with The Cyclic Number trick, which Gardner attributes to Mr. Lloyd Jones of Oakland, California (1942). Give your spectator five red cards: 2, 3, 4, 5, and 6. Keep for yourself six black cards: A, 4, 2, 8, 5, 7. The magician deals out his cards in a row and has his spectator write down the number: 142857. The spectator picks one of his own cards, say 5, and multiplies the two numbers on a sheet of paper, giving in this case 714285. The magician picks up his cards, gives a quick cut and, lo and behold, deals out the six cards in the order corresponding to the spectator’s multiplication: 714285.

The trick rests on the fact that the number 142857 is cyclic. Multiply it by any number from 1 to 6 and the result will be the same digits, in the same cyclic order. What else would you expect the reciprocal of a prime number, in this case seven, to do? Math (and a little dexterity faking the cuts and shuffles) is all you need.
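A quick way to convince yourself before trying the trick on friends is to check each multiple in Python. This sketch tests whether every product is a rotation of the original digits, which is exactly what a single cut of the six cards can produce; the helper name is my own.

```python
def is_rotation(original: str, candidate: str) -> bool:
    """True if candidate is original with some digits moved from front to back."""
    return len(original) == len(candidate) and candidate in original + original


CYCLIC = 142857

for multiplier in range(1, 8):
    product = CYCLIC * multiplier
    print(multiplier, product, is_rotation(str(CYCLIC), str(product)))
```

The multipliers 1 through 6 all give rotations, which covers the spectator’s cards 2 through 6. Multiplying by 7 breaks the pattern and gives 999999, which is why there is no 7 among the spectator’s cards.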

Using the mathematical structure of a deck (4 suits, 13 types of cards) leads to a variety of more complex games, including modular arithmetic which was the reason for “mod 4” and “mod 13” on the boards. Pick up Gardner’s book and enjoy.

Sorry this blog post is late because I never did figure out the math behind Howard’s trick.
